Video Capture

Last updated March 2015. Contributor: mcasas@

Overview

There are several components that make Video Capture possible in Chrome:

- the Browser-side classes

- the common Render infrastructure supporting all MediaStream use cases

- the local <video> playback and/or Jingle/WebRTC PeerConnection implementation.

At the highest level, both Video and Audio capture are abstracted as a MediaStream; this JS entity is created via calls to getUserMedia() with the appropriate bag of parameters. What happens under the hood, and which classes do what, is the topic of this document. I'll speak about Video Capture, although some concepts carry over to Audio. I'll speak of WebCams/Cameras, but the same reasoning applies to Video Digitizers, Virtual WebCams, etc.

Browser

In the Cr sandbox model, all of what pertains to using hardware is abstracted in the Browser process, to which Render clients direct requests via IPC mechanisms, and Video Capture is no different.

The goal of this set of classes is to enumerate, open and close devices for capture, process captured frames, and keep track of the underlying resources.

At the Browser level no device-specific objects (that is, nothing other than the singletons) should be created before the user first tries to enumerate or use any kind of capture device (Video or Audio). That is the reason why "Video Capture Device Capabilities" under chrome://media-internals is empty until a capture device is actually used.

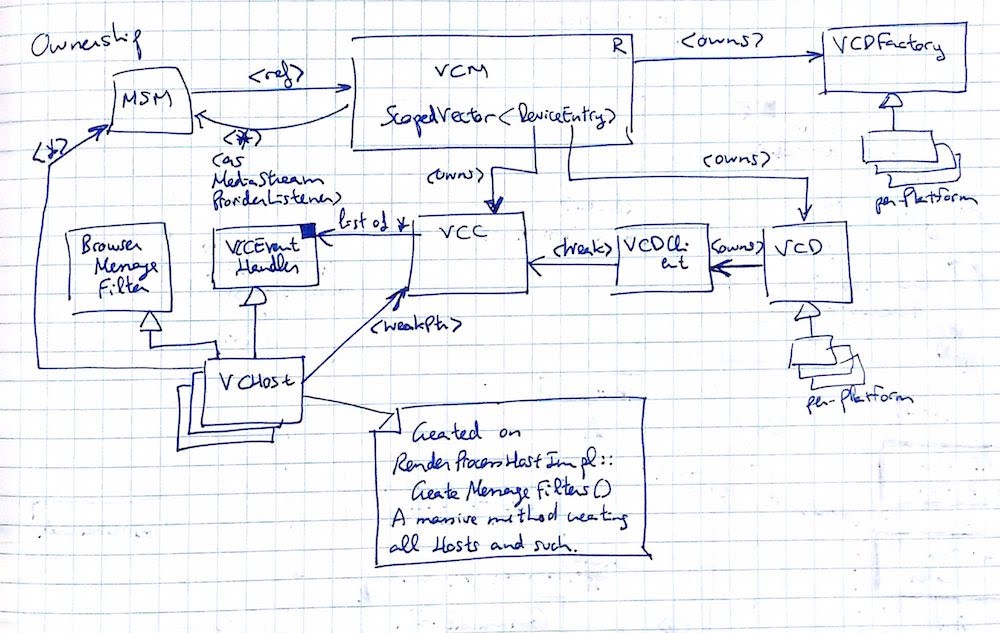

Let's have a look at the diagram. Early in Chrome startup there is just one class, MediaStreamManager (MSM), sitting lonely in the upper left corner. MSM is a singleton class created and owned by BrowserMainLoop on the UI thread. It uses a bunch of MediaStream* classes to have conversations over IPC and to ask the user for permissions (MediaStreamUI*). The MS* classes deal with both Audio and Video.

On construction, MSM creates two other important singletons, namely the ref-counted VideoCaptureManager (VCM) and the associated VideoCaptureDeviceFactory (VCDF). VCDF is used to enumerate device names and their capabilities, if they have any, and to instantiate VCDevices. There is one implementation per platform, and a few are available:

- Platform specific: Linux/CrOS (based on V4L2 API), Mac (both QTKit and AVFoundation APIs), Windows (both DirectShow and Media Foundation APIs) and Android (Camera and Camera2 APIs).

- Debug/Test devices: FakeVCD and FileVCD. FakeVCD produces a stream with a rolling PacMan over a green canvas, while FileVCD plays uncompressed Y4M files.

- Other captures: Desktop and Tab (Web contents) Capture.
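
The enumerate-then-instantiate role of the factory can be sketched as follows. This is only a minimal illustration of the pattern, assuming a fake backend; every name ending in "Sketch" is hypothetical and does not reproduce the real VideoCaptureDeviceFactory or VideoCaptureDevice interfaces.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, simplified stand-ins for illustration only; these are not the
// real Chromium interfaces.
struct DeviceNameSketch {
  std::string id;             // unique identifier, e.g. a device path
  std::string friendly_name;  // what a UI would show
};

class DeviceSketch {
 public:
  virtual ~DeviceSketch() = default;
  virtual std::string Describe() const = 0;
};

// One subclass per platform or per test backend would implement this.
class DeviceFactorySketch {
 public:
  virtual ~DeviceFactorySketch() = default;
  virtual std::vector<DeviceNameSketch> GetDeviceNames() const = 0;
  virtual std::unique_ptr<DeviceSketch> Create(
      const DeviceNameSketch& name) const = 0;
};

class FakeDeviceSketch : public DeviceSketch {
 public:
  explicit FakeDeviceSketch(std::string id) : id_(std::move(id)) {}
  std::string Describe() const override { return "fake:" + id_; }

 private:
  std::string id_;
};

// A fake backend that reports a fixed number of devices.
class FakeDeviceFactorySketch : public DeviceFactorySketch {
 public:
  explicit FakeDeviceFactorySketch(int device_count)
      : device_count_(device_count) {
    assert(device_count >= 0);
  }

  std::vector<DeviceNameSketch> GetDeviceNames() const override {
    std::vector<DeviceNameSketch> names;
    for (int i = 0; i < device_count_; ++i) {
      names.push_back({"/dev/video" + std::to_string(i),
                       "fake device " + std::to_string(i)});
    }
    return names;
  }

  std::unique_ptr<DeviceSketch> Create(
      const DeviceNameSketch& name) const override {
    return std::make_unique<FakeDeviceSketch>(name.id);
  }

 private:
  int device_count_;
};
```

A caller holding only a DeviceFactorySketch pointer can list the names, pick one and call Create() on it without knowing which backend is behind the interface.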

VCM manages the lifetime of pairs <VideoCaptureController, VideoCaptureDevice>. VideoCaptureDevices (VCD) are adaptation layers between whichever infrastructure is provided by the OS for Video Capture and VideoCaptureController (VCC), whose mission is to bridge captured frames towards Render clients through the IPC.

VCC interacts with the Render side code via a list of VideoCaptureHosts (VCH). VCH is a dual-citizenship bridge between commands coming from Render to VCM (VideoCaptureHostMsg_Start and relatives) and those flowing from VCC towards Render. There are as many VCHosts as Render clients, and they are created by RenderProcessHostImpl, another high-level entity.

Large data chunks cannot be passed through the IPC pipes, so a Shared Memory mechanism is implemented in VideoCaptureBufferPool (VCBP; not in the diagram, it lives inside VCC). These ShMem-based "Buffers", as they are confusingly called, are allocated on demand and reserved for either the Producer or the Consumers. VCC keeps track of them and recycles each one when all clients have sent the corresponding release message.

VideoFrames know nothing about the Buffers in this Pool, so VCC has to keep count of their uses and recycle them via, again, IPC.
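
The reserve/hold/release bookkeeping described above can be sketched roughly like this. It is a simplified illustration under stated assumptions, not the actual VideoCaptureBufferPool: plain heap vectors stand in for shared memory, there is no locking, and the class and method names are chosen for the sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr int kInvalidBufferId = -1;

// Hypothetical sketch: a bounded pool whose buffers are allocated on demand
// and can be reused only once neither the producer nor any consumer holds them.
class BufferPoolSketch {
 public:
  explicit BufferPoolSketch(int max_buffers) : max_buffers_(max_buffers) {
    assert(max_buffers > 0);
  }

  // Returns the id of a free buffer of at least |size| bytes, allocating a
  // new one if the pool is not full; returns kInvalidBufferId otherwise.
  int ReserveForProducer(std::size_t size) {
    for (int id = 0; id < static_cast<int>(buffers_.size()); ++id) {
      Buffer& buffer = buffers_[id];
      if (!buffer.held_by_producer && buffer.consumer_holds == 0) {
        if (buffer.memory.size() < size) buffer.memory.resize(size);
        buffer.held_by_producer = true;
        return id;
      }
    }
    if (static_cast<int>(buffers_.size()) >= max_buffers_)
      return kInvalidBufferId;
    buffers_.push_back(Buffer{std::vector<std::uint8_t>(size), true, 0});
    return static_cast<int>(buffers_.size()) - 1;
  }

  // The producer hands the filled buffer to |num_clients| consumers.
  void HoldForConsumers(int id, int num_clients) {
    Buffer& buffer = At(id);
    assert(buffer.held_by_producer && num_clients >= 0);
    buffer.consumer_holds += num_clients;
  }

  // The producer is done writing.
  void RelinquishProducerReservation(int id) {
    At(id).held_by_producer = false;
  }

  // Called when |num_clients| consumers have sent their release message.
  void RelinquishConsumerHold(int id, int num_clients) {
    Buffer& buffer = At(id);
    assert(num_clients >= 0 && buffer.consumer_holds >= num_clients);
    buffer.consumer_holds -= num_clients;
  }

 private:
  struct Buffer {
    std::vector<std::uint8_t> memory;  // stand-in for a shared memory region
    bool held_by_producer;
    int consumer_holds;
  };

  Buffer& At(int id) {
    assert(id >= 0 && id < static_cast<int>(buffers_.size()));
    return buffers_[id];
  }

  int max_buffers_;
  std::vector<Buffer> buffers_;
};
```

With a pool of one buffer held by two consumers, a new reservation keeps failing until the second consumer releases; only then is the same buffer id handed out again.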

It's relatively common that Capture Devices do not provide exactly what the Render side requests or needs, hence an adaptation layer is inserted between VCC and VCD: VideoCaptureDeviceClient (VCDC). VCDC adapts sizes, applies rotations and otherwise converts the incoming pixel format to YUV420, which is the global transport pixel format. The output of this conversion rig is a ref-counted VideoFrame, which is the generic video transport class. All VCDs assume a synchronous capture callback, hence VCDC copies/converts the captured buffer onto the ShMem allocated as a "Buffer". This VideoFrame-Buffer pair is then replicated to each of the VCC's VCHosts.
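
To make the pixel format conversion step concrete, here is a minimal sketch of converting a packed RGB24 frame into three YUV 4:2:0 planes. It assumes the planar I420 layout, the common BT.601 limited-range integer approximation, and chroma taken from the top-left pixel of each 2x2 block; the function and struct names are hypothetical, and this hand-written loop is not what the real adaptation layer runs.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical container for the three I420 planes.
struct I420FrameSketch {
  int width;
  int height;
  std::vector<std::uint8_t> y;  // width x height
  std::vector<std::uint8_t> u;  // ceil(width/2) x ceil(height/2)
  std::vector<std::uint8_t> v;  // same size as u
};

// Chroma planes are subsampled by two in each direction, rounding up.
inline int ChromaDimension(int luma_dimension) {
  return (luma_dimension + 1) / 2;
}

// Converts packed RGB24 (R, G, B byte order, no row padding) to I420.
I420FrameSketch ConvertRgb24ToI420Sketch(const std::vector<std::uint8_t>& rgb,
                                         int width,
                                         int height) {
  assert(width > 0 && height > 0);
  assert(rgb.size() == static_cast<std::size_t>(width) * height * 3);

  const int chroma_width = ChromaDimension(width);
  const int chroma_height = ChromaDimension(height);
  const std::size_t luma_size = static_cast<std::size_t>(width) * height;
  const std::size_t chroma_size =
      static_cast<std::size_t>(chroma_width) * chroma_height;

  I420FrameSketch frame{width, height,
                        std::vector<std::uint8_t>(luma_size),
                        std::vector<std::uint8_t>(chroma_size),
                        std::vector<std::uint8_t>(chroma_size)};

  for (int row = 0; row < height; ++row) {
    for (int col = 0; col < width; ++col) {
      const std::size_t pixel = static_cast<std::size_t>(row) * width + col;
      const int r = rgb[pixel * 3 + 0];
      const int g = rgb[pixel * 3 + 1];
      const int b = rgb[pixel * 3 + 2];

      // Every pixel contributes one luma sample.
      frame.y[pixel] = static_cast<std::uint8_t>(
          ((66 * r + 129 * g + 25 * b + 128) >> 8) + 16);

      // One chroma sample per 2x2 block, taken from its top-left pixel.
      if (row % 2 == 0 && col % 2 == 0) {
        const std::size_t chroma =
            static_cast<std::size_t>(row / 2) * chroma_width + col / 2;
        frame.u[chroma] = static_cast<std::uint8_t>(
            ((-38 * r - 74 * g + 112 * b + 128) >> 8) + 128);
        frame.v[chroma] = static_cast<std::uint8_t>(
            ((112 * r - 94 * g - 18 * b + 128) >> 8) + 128);
      }
    }
  }
  return frame;
}
```

For even dimensions the three planes together take width x height x 3/2 bytes, half the size of the RGB24 input, which is one reason a 4:2:0 format is attractive as a transport format.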

(For the curious, IPC listener classes derive from BrowserMessageFilter.)

Device Monitoring?

Common Render Infra

Local <video> Playback

Remote WebRTC/Jingle encode and send